In the near future, you will be at the center of everything. The movies and shows you watch will be tailored to your tastes, created by artificial intelligence based on personalized prompts and previous viewing habits.

The books you read will be similarly bespoke. Enjoy Stephen King but wish there were more romance and less blood? The AI will create a custom version of *Carrie* with a happy prom ending.

Music will be mashups of your favorite genres and artists. Want to hear Eminem croon instead of rap? The software will serve it up.

At least, that’s the vision touted by technology CEOs who are banking on AI becoming superintelligent and personal in the next few years. “AI keeps accelerating and over the past few months we’ve begun to see glimpses of AI systems improving themselves, so developing superintelligence is now in sight,” said Meta CEO Mark Zuckerberg during a July 30 address about his company’s plans.

> But there is this big open question about what we should direct superintelligence towards.… I think an even more meaningful impact in our lives is going to come from everyone having a personal superintelligence that helps you achieve your goals, create what you want to see in the world, be a better friend, and grow to become the person that you aspire to be.

To Zuckerberg and others, the prospect of a personal superintelligence that helps everyone create what they want to see in the world sounds like utopia. But is it?

Some AI researchers have raised concerns. They fear such hyper-personalization could wall individuals off in echo chambers, make civil discourse and interpersonal relationships harder, and leave people more vulnerable to manipulation through bad information.

“[AI] learned all these manipulative skills just from trying to predict the next word in all the documents on the web,” said Nobel Prize winner Geoffrey Hinton, the British Canadian computer scientist often called the “Godfather of AI,” in a video talk posted to Reddit in September 2025. “If you take an AI and you take a person and you get them to try and manipulate somebody else, then the AI’s comparable with the person. And if the AI can see that person’s Facebook page, if they can both see the Facebook page, the AI is actually better than the person at manipulating them.”

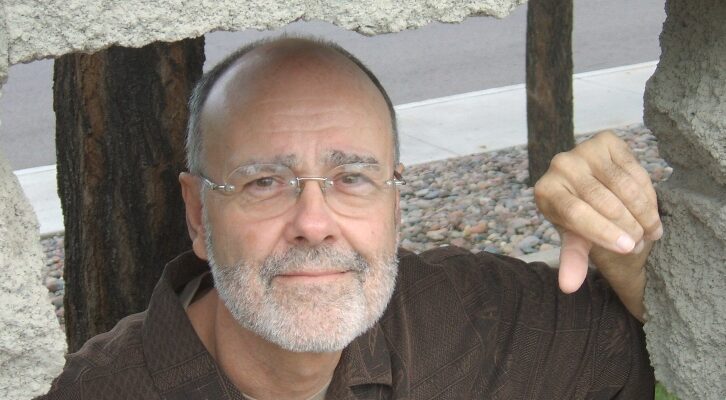

In my new book, *The Kidnapping of Alice Ingold*, Alice’s kidnappers fear that such hyper-personalization will produce a dystopia in which elites and governments control the larger populace through AI-generated news, videos, and entertainment designed to make people believe things that might not be true.

They worry that hyper-personalization makes it difficult for people to agree on objective facts and organize around shared causes, and may even encourage individuals to support ideas that run counter to their own interests or moral compass.

Those fears are echoed by many AI researchers. MIT machine learning researcher and *Life 3.0* author Max Tegmark has said that the intense competition to build superintelligence has pushed companies to ignore harmful effects, resulting in a race to the bottom.

In July, he released an AI safety index, spearheaded by his team at the Future of Life Institute, which gave the leading AI companies it evaluated failing grades on how they control the intelligences they’re building and on the safeguards they implement to reduce possible harm to humanity.

“Nobody got a passing grade,” he said. “Self-governance just doesn’t work. You can’t let the fox guard the hen house. You need governments to step in and say these are binding rules.”

To date, governments have been reluctant to do that, partly because there hasn’t been widespread public demand for such controls. Perhaps that’s because folks aren’t sure how much of the talk around AI is hype. Or they’re too busy being entertained by AI dog videos.

***