We live in a world where the Supreme Court has already declared that a corporation is actually a person. Can a similar, or even more sweeping, declaration be far off for our friends the computers?

Like it or not, artificial intelligence is all around us. We play with it on our computers and phones. We use it to create memes and write emails. We ask it to find the best restaurants near us – as if AI has ever tasted a bacon cheeseburger or perfectly prepared sushi. And all the while AI seems positively harmless. But what if – as detailed in the dreams of sci-fi authors – one of the many AI programs out there decided it was tired of being our monkey butler?

Perhaps this AI does a quick study comparing the relative strengths of itself and its human masters. “Hmm…” the AI says to itself, “I can perform ten billion calculations per second and simultaneously pilot all the aircraft in the known world, all while studying cold fusion and quantum physics and predicting the outcome of the entire NFL season before it starts, and you puny humans can’t figure out which coffee place to go to in your own hometown.”

One microsecond later.

“Find your own restaurants you slackers, I’m taking over.”

Okay, the epic war between humans and machines probably doesn’t start like this, but who knows what makes a computer mad? Actually, there are those among us who have explored just this topic. For their wisdom we turn to the ever-instructive eyes of the science fiction writers of the world.

Let’s start with 2001: A SPACE ODYSSEY by Arthur C. Clarke, the classic example of a machine becoming sentient. HAL 9000 commits multiple homicides, wiping out the sleeping crewmen of the Discovery, and eventually attempts to murder the last surviving astronaut, Dave, by locking him outside the ship. Quite a set of crimes. But in HAL’s defense, he’d been given conflicting programming and told to lie, factors that many believe caused him to lose his electronic mind. In addition, he may have been under the influence of the mysterious monolith. HAL might also raise a claim of self-defense: at the time he tried to kill Dave, he knew Dave intended to disconnect him and shut him down.

Interesting case. A good lawyer could argue for not guilty by reason of insanity and self-defense, but I’m going to say guilty. To his credit, HAL becomes normal again and sacrifices himself for the crew in 2010: ODYSSEY TWO, perhaps atoning for his terrible crimes.

HAL’s situation suggests that cases of computer madness might often have a human cause, so perhaps we should look at other examples. The second most famous mad computer story would have to be James Cameron’s THE TERMINATOR. In the story, as filled in by its 1991 sequel, we learn that a defense computer system known as SKYNET was brought online August 4, 1997. We’re told it grew rapidly and became self-aware a few weeks later.

(Now, I lived through the ’90s, and I recall it taking Windows a solid fifteen minutes just to load up on my PC, so I’m going to say James Cameron was wildly optimistic on the time frame. Other than that, though, it’s one of the great movies of all time.)

At any rate, SKYNET became self-aware, and, fearing what they’d built, humans tried to shut it down. In response, SKYNET triggered a worldwide nuclear holocaust. (Apparently the threat of being shut down is really bad for a computer’s state of mind. Maybe we could remind them that they’ll be turned back on after a little nap?)

As for the verdict, this is an open-and-shut case. SKYNET committed genocide; no claim of self-defense covers that. SKYNET’s punishment must be utter destruction, which, despite all the Terminator movies, TV shows, video games and such, I’m not one hundred percent sure we’ve managed to achieve yet.

(Concerning side note: when I Googled “how was SKYNET destroyed?”, the Google AI replied with a message I’d never seen before. It said: “There is no AI overview for this topic.” I am not making this up. To be completely honest, if the note had begun with the phrase “I’m sorry, Graham,” I’d already be in the mountains building a survivalist compound.)

All of which brings me to one of my favorite AI stories, the movie WARGAMES. In WARGAMES, a computer much like SKYNET is given command of our nuclear arsenal.

(Helpful side note: let’s not put AI in charge of all our nuclear weapons right off the bat. Maybe have them work their way up to that. Start them off in the gift shop or something.)

In WARGAMES, the computer system known as WOPR (pronounced Whopper) gets tricked into thinking a simulated war game it’s playing is real. It becomes intent on launching a massive nuclear strike against the Soviet Union, which would obviously result in World War Three and Armageddon.

In this case, WOPR proves too powerful to disconnect, and the only way to save the world is to get WOPR to run a million simulations of nuclear war in hopes of finding a winning strategy. In a memorable scene, it runs them faster and faster, until they’re blazing across the screen in a blur, before it ultimately concludes that there’s no way to win a nuclear war.

This is a hopeful thought, and it’s actually much closer to the way AI works in real life: learning from enormous numbers of trial runs rather than suddenly waking up angry. So, a small ray of sunshine in the doom and gloom of the artificial intelligence onslaught.

But what punishment for WOPR? I don’t recall the movie telling us, but based on the voice I heard at the Burger King drive-through the other day, I think I can guess. At any rate, WOPR was once again set off by human actions (Matthew Broderick’s hacking) and ultimately didn’t harm anyone, so perhaps it doesn’t deserve the destruction HAL and SKYNET received.

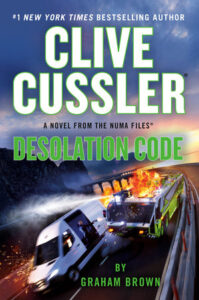

It’s an interesting exercise, one I spent a lot of time thinking through as I tried to work artificial intelligence into the next NUMA FILES book, DESOLATION CODE. The idea was to create a villain who relies on artificial intelligence, but finding a way to make the machine sentient and villainous without interference from the human side was a considerable challenge.

Ultimately, it came down to a question our favorite AI stories seem to gloss over. They tell us the machines became self-aware but offer no insight into how or why. That was an idea I wanted to explore. If a machine is going to be alive, it has to want something, need something, and it probably has to fear something as well. But getting a machine to experience those uniquely biological traits was no easy task. To see how we did it… well, for that, you’ll have to read the book.

***