You know the spiel. Artificial intelligence will solve all our problems. It will compute our calculus, it will write our emails, it will give us the most efficient recipe for banana bread, etc. But humans will still be around, one hopes, and so, humans being humans, there will still be greed and jealousy and hate and therefore there will be crime. But surely AI will solve that too!

But why use the future tense? For over a decade now, AI has been deployed in the U.S. to prevent crime. Let me introduce you to COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), a proprietary bit of software licensed by Equivant (a subsidiary of billion-dollar conglomerate Constellation Software). COMPAS, you’ll be delighted to learn, is used by courts across the country to measure recidivism risk in determining pre-trial and parole release. Miraculous! True, upon investigation, COMPAS does tend to demonstrate a pronounced racial bias, so much so that “blacks are almost twice as likely as whites to be labeled a higher risk but not actually re-offend”, but a further study demonstrated that this bias is not limited to race but extends to gender, so…yay?

But why fixate on those who have already been arrested? Let’s see how helpful AI is (again, notice the present tense) in using facial recognition software to identify criminals. You know facial recognition software. You probably used it to log in to the device you’re using to read this missive. Did you know that the police regularly use it to identify suspects? Ask Robert Williams. Detroit investigators engaged this software to compare grainy footage from a security camera to DMV photographs and positively identify him as the culprit in a robbery. True, this irrefutable evidence was later refuted at an evidentiary hearing after Mr. Williams had been held for 30 hours, but it’s the thought that counts, right?

But that’s a large part of the problem. There is no “thought” at all. Artificial intelligence is, lest we forget, not intelligent at all, at least by any human measurement. The Turing Test, for example, does not measure a computer’s ability to think but instead its ability to fool humans through the simulation of thought. This is not to say that AI can’t be an assistive tool. Not only has this been proven true in real-life crime investigation but also in simulation-life crime novels, be it Holly Gibney’s ease with Google or Kat Frank’s facility with holograms. The problem occurs when we rely on its “thinking” to replace our thinking. If you still don’t believe me, ask the lawyer who used ChatGPT to write his entire brief, which the AI riddled with inaccuracies (or, in preferred parlance, “hallucinations”).

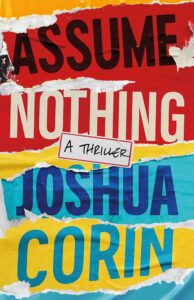

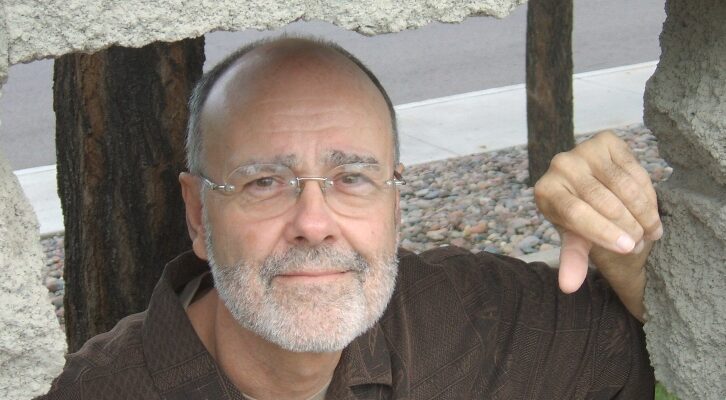

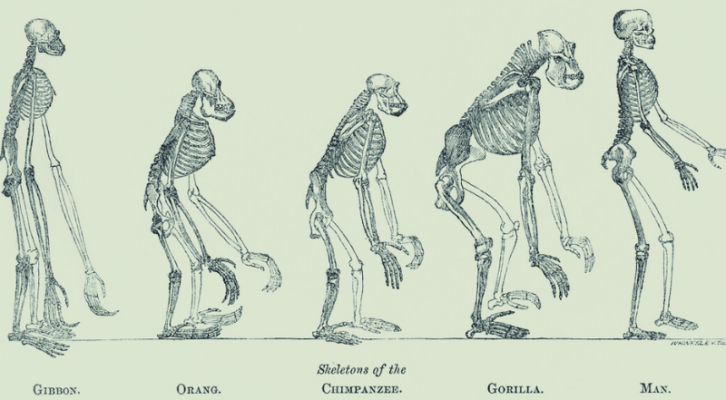

If I sound like a Luddite raising his fist against nascent technology, well, blame my latest novel, Assume Nothing, and the convictions held and espoused by one of its main characters, Alik Lisser. Lisser is my homage to the genius detectives of the 19th and 20th centuries, such as Sherlock Holmes and Nero Wolfe, who applied their vast, encyclopedic knowledge to solving cases. He rails against law enforcement’s overreliance on computers to do the reasoning that, he believes, can only be done by a human mind working in tandem with human intuition. And my novel takes place in 1996! Though let’s not assume Alik is completely selfless in his motivations. He is, after all, only human.

***