There was once a Golden Age when mystery and thriller writers had the simplest of plot devices in their toolkit: you can't reach the person you most need to warn, or summon help. The phone is dead. The line is cut. Or everyone is cut off in an Agatha Christie-style country house, on a train hurtling across Europe, or on a cruise down the Nile.

Then email, the internet, laptops and cell phones arrived.

If there was ever a series that showcased this arrival, it was The X-Files. The first season was set in 1993, and even though the pilot episode has the FBI agents stuck in a town where the power has gone down so they can't call for help, they were soon whipping out phones the size of bricks to speak to each other, and sending emails to their bosses at the FBI from the field.

All credit to The X-Files writing room, because this wasn't an easy time to be a writer. For a while, there was a trend of setting stories before the invention of the cell phone in order to steer around it, or of finding other convenient ways to shut people off from the world. Fact is, there's a glorious tension in not being able to communicate, or in being trapped with no way of summoning help. We still see this today. Tom Hindle deliberately resurrects the trope in his recent Death in the Arctic, set on a luxury airship, achieving Golden Age-style isolation while also neatly explaining why there are no working cell phones or internet access.

But for the most part, writers pivoted. If the phone couldn't be taken away, it could be used. The 2010s crime story embraced technology as a partner: CCTV on every corner, DNA that turned up leads from a years‑cold cigarette butt, cell towers and Wi‑Fi logs, and eventually "Find My" or smartwatch records pinning down timelines, and even heartbeats, to the minute. Investigations became montages of analysts at screens, magically enhancing blurry images.

But the ground is moving again, and it’s moving faster. Now, it’s not just the detectives using tech, it’s the criminals too. The next decade of crime fiction will be about mistrust—of video and images, of logs, of everything written down in digital ink.

Once, a grainy camera helped solve crimes. Now we have cameras that see crisply and are in everyone's hands, with anything remotely unusual uploaded to social media in an instant. We also have AI models that can forge footage indistinguishable from the real thing: plenty of people were recently fooled by fake night-time CCTV footage, shared on TikTok and Instagram, of bunnies jumping on a trampoline. If we can't trust footage of bunnies on a trampoline, what can we believe?

In the past, crimes were hard to solve because there was too little information. In the future, they may be just as hard because there's too much, and we can't tell what's real. Tech can now be used to manufacture innocence, from deepfakes and AI-generated alibis to forged digital time stamps. This isn't just a matter of plot mechanics; it means crime writers must now think like hackers, not just gumshoes.

What does this do to things like alibis? Phones made alibis precise. Your handset snitched on you. But soon, even as the victim is being tied up, the killer's calendar assistant is rescheduling meetings. An AI receptionist answers calls in their voice. A lifelike avatar sits in a video conference, blinking, nodding and smiling on cue, with just enough delay to blame on bandwidth. By the time the body's found, three different systems will swear the suspect was across town.

Right now, AI detection in education is failing students, and potentially ruining their degrees, because universities rely on inadequate tools to judge whether an essay was written by a human or by AI. These tools seem to err on the side of flagging AI, partly because academic essays are deliberately written in a neutral tone. The tools simply can't tell reliably, so they flag work they shouldn't. Tomorrow's fictional police officer might likewise have tech two steps behind the criminal's. Their AI detectors might flag genuine alibi evidence in the same way: this is definitely fake, when it's actually real.

On the page, it's going to be simpler: the detective cannot trust the things that used to be taken for granted. Once-reliable footage is now unreliable. A voice recording could be generated. The culprit didn't disable the cameras; they hacked the security company and taught the cameras to misrecognize them as a plant.

From a craft perspective, this is both a headache and a gift. The headache is obvious: many go‑to beats are going extinct, just as the cell phone killed off the 'can't warn them' plot device.

The gift is that crime writing returns to what it does best: people. In a world of synthetic overlays, the stubbornly analog detail becomes powerful again. It'll be the era of a new Sherlock Holmes, noticing the smallest habits and making sharp deductions. The line cook's burn scar is too new to fit the alibi; the suspect arranges cutlery left‑handed but signs hotel receipts right‑handed. Technology remains everywhere, but as misdirection, as noise, rather than as a magic key.

Crime fiction writers will have to make a choice. They can set stories in the recent past, where the ecosystem is familiar and the evidence has that pre-AI tang of solidity. They can strip technology away: a luxury airship, a remote island, the cabin in the woods.

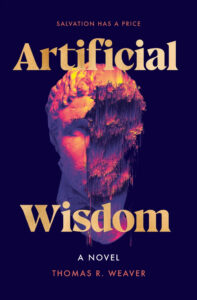

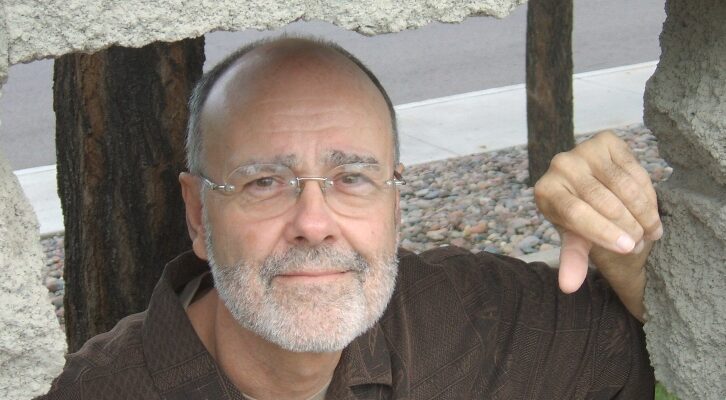

Or they can lean in. This is what I chose to do in Artificial Wisdom, a futuristic murder mystery set a quarter of a century in the future. It's a world where technology gets in the way of solving the crime. Cleaning bots tidy away the evidence before the police even get there, and the question of what is really true and what can be trusted is a constant theme. It became a fun problem to solve: having the protagonist trip over the technology while also leveraging it. In my view? That's something we'll see a lot of in the decade ahead.

***